One of the major obstacles to an effective cybersecurity strategy is achieving full attack surface visibility because, as everyone knows, you can’t protect what you can’t see.

When it comes to visibility, every organization needs to do two things:

- Achieve full attack surface visibility

- Continuously maintain it

Many organizations struggle with one or both, mainly because tools that promise continuous visibility fail to map the full extent of modern digital infrastructure, which changes constantly.

So, what should be mapped? What is usually forgotten? How does one achieve and maintain visibility and, on top of that, know that it is truly complete? Let’s unpack it.

What Is Attack Surface Visibility?

Attack surface visibility is a measure of how clearly you can see your organization’s entire digital footprint and its vulnerabilities. It’s more nuanced than counting IT assets: attack surface visibility is about understanding the risk exposure of those assets.

In other words, complete visibility means knowing not just what you own, but also how it looks to an outsider, specifically one with malicious intent. If visibility is incomplete, threat actors might discover vulnerabilities before you do.

The Key Components of Attack Surface Visibility

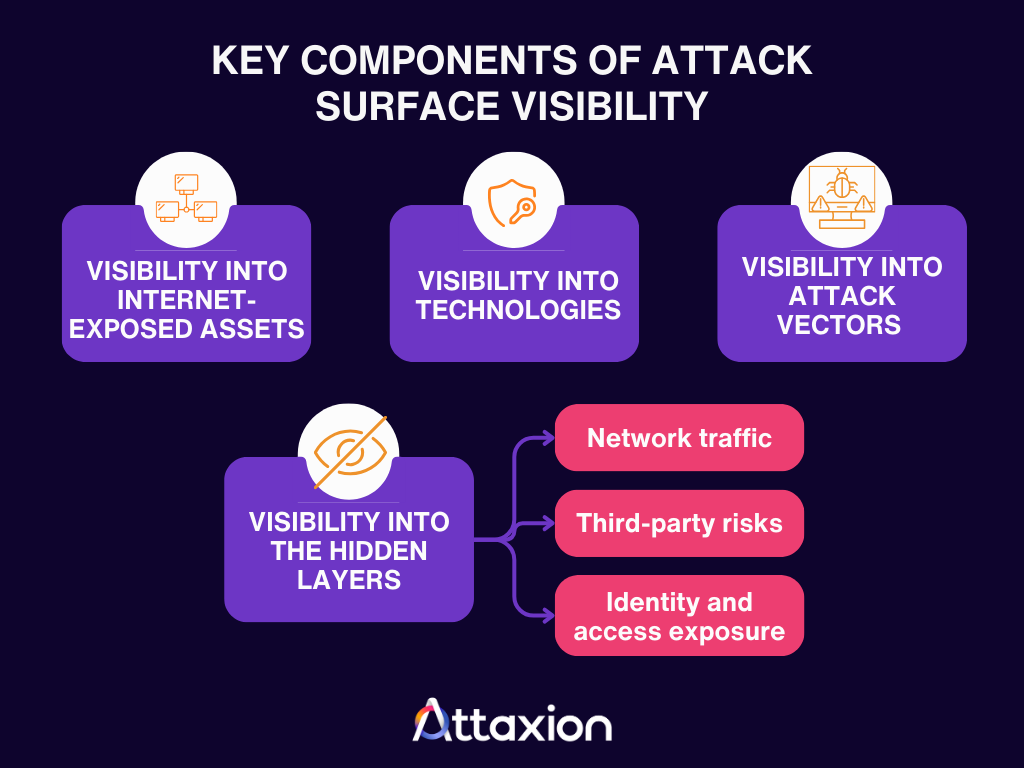

To get complete attack surface visibility, you need to break it into actionable categories. In this article, we’ll divide it into these four:

- Visibility into internet-exposed assets

- Visibility into technologies

- Visibility into attack vectors

- Visibility into hidden layers (namely network traffic, third-party risks, and identity/access exposures)

1. Visibility into Internet-Exposed Assets

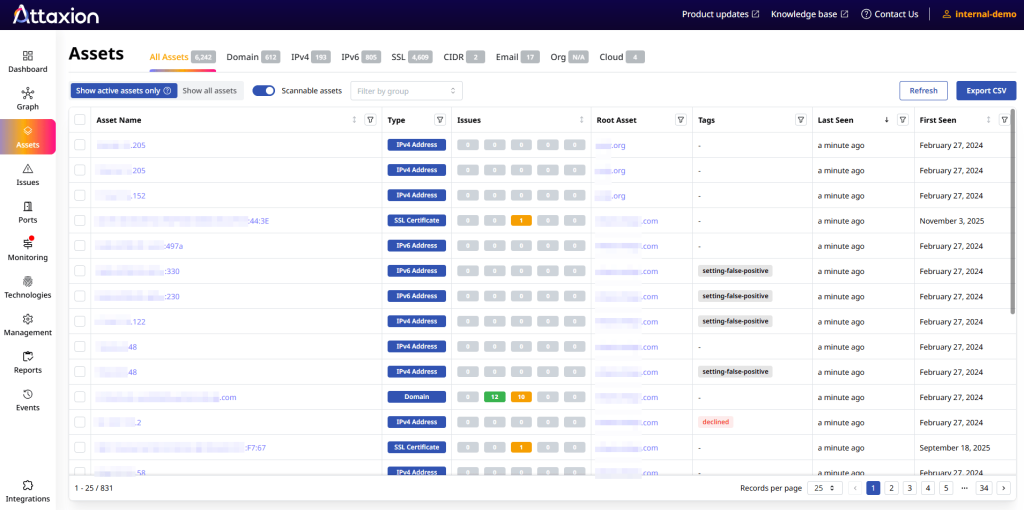

Better asset discovery coverage equals better visibility. This means that you need to

- inventory everything and

- determine which of those assets are exposed to the public internet.

This discovery covers your main corporate website, marketing landing pages, legacy email servers, cloud infrastructure, SaaS applications, and other external-facing assets.

Asset discovery must also find assets that are exposed unintentionally, such as a database or server left wide open to the public web by a simple misconfiguration, or a shadow application an employee downloaded.

How to achieve it

To gain visibility into these exposures, you must see your infrastructure through the adversary’s eyes. This external perspective comes from Open Source Intelligence (OSINT) techniques combined with active probing. OSINT-based discovery tools search the public web for your organization’s digital footprint, finding assets (including unintentionally exposed ones) and picking up the digital breadcrumbs your organization has unknowingly left behind. Active probing then validates those assets and helps find anything OSINT may have missed.
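To make the OSINT step more concrete, here is a minimal Python sketch of one small piece of it: turning raw hostnames collected from public sources (for example, names found in certificate transparency logs or passive DNS) into a clean, in-scope candidate list for active probing. The function name, the `example.com` sample data, and the root-domain scope list are illustrative assumptions, not part of any particular tool.

```python
# Minimal sketch: normalize hostnames gathered from OSINT sources
# (e.g. certificate SANs, passive DNS) into an in-scope candidate list.
# The scope list and sample data below are hypothetical.

def normalize_candidates(raw_names, root_domains):
    """Return sorted, de-duplicated hostnames that fall under root_domains."""
    roots = {r.lower().rstrip(".") for r in root_domains}
    candidates = set()
    for raw in raw_names:
        # Certificate fields may hold several newline-separated names.
        for name in raw.split("\n"):
            host = name.strip().lower().rstrip(".")
            # A wildcard such as *.dev.example.com tells us the parent
            # name exists; keep the parent as a candidate to probe.
            if host.startswith("*."):
                host = host[2:]
            if not host:
                continue
            # Keep exact root matches and true subdomains only, so that
            # "notexample.com" is not mistaken for "example.com".
            if any(host == r or host.endswith("." + r) for r in roots):
                candidates.add(host)
    return sorted(candidates)


if __name__ == "__main__":
    raw = [
        "www.example.com\n*.dev.example.com",
        "Mail.Example.com.",
        "notexample.com",
    ]
    print(normalize_candidates(raw, ["example.com"]))
    # ['dev.example.com', 'mail.example.com', 'www.example.com']
```

The suffix check uses a leading dot on purpose: plain `endswith("example.com")` would wrongly pull in unrelated domains, which is exactly the kind of false positive discussed below.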

- Explore asset discovery tools and pick one that offers the highest asset coverage.

- The two main metrics to compare are coverage and the number of false positives, that is, assets the tool wrongly attributes to your organization.

- No tool covers everything without generating false positives, so focus on how many false positives a tool produces and how easy (or difficult) it is to clean them out of the inventory.
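As a rough illustration of these two metrics, the sketch below compares a tool’s output against an inventory your team has already validated. It assumes such a validated reference set exists; in practice that set is itself incomplete, so an item counted as a "false positive" may simply be an asset nobody has confirmed yet. Treat the numbers as relative indicators when trialing tools on the same scope. The function name and sample data are hypothetical.

```python
def evaluate_discovery(tool_assets, validated_assets):
    """Compare a discovery tool's results with a validated reference inventory."""
    found = set(tool_assets)
    truth = set(validated_assets)
    true_hits = found & truth
    false_positives = found - truth  # attributed to you, but not in the reference set
    missed = truth - found           # in the reference set, but not found by the tool
    coverage = len(true_hits) / len(truth) if truth else 0.0
    return {
        "coverage": coverage,
        "false_positives": sorted(false_positives),
        "missed": sorted(missed),
    }


tool = ["a.example.com", "b.example.com", "x.other.net"]
validated = ["a.example.com", "b.example.com", "c.example.com", "d.example.com"]
print(evaluate_discovery(tool, validated))
# {'coverage': 0.5, 'false_positives': ['x.other.net'], 'missed': ['c.example.com', 'd.example.com']}
```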

2. Visibility into Technologies

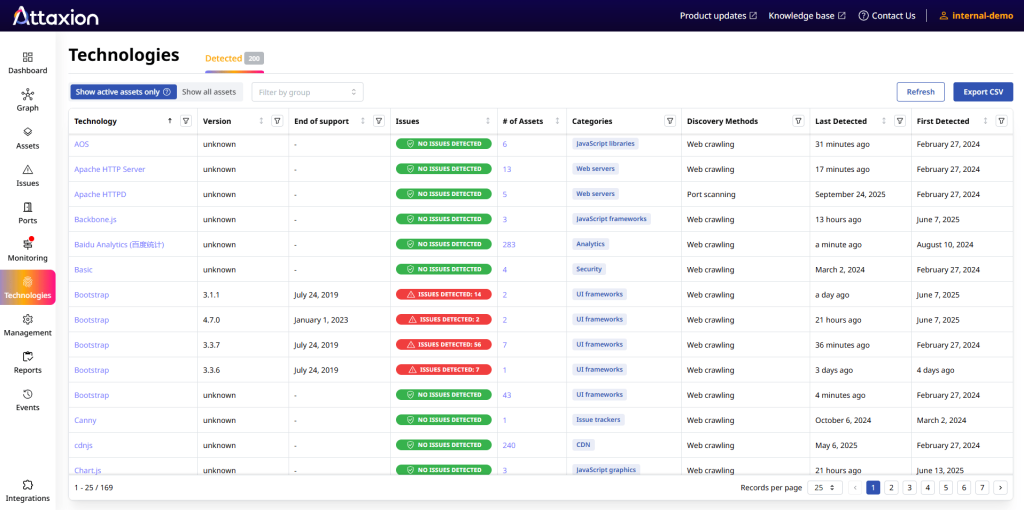

Once you have a complete asset inventory, you need to know what is running on those assets. This is where technology fingerprinting comes in.

Is that server running Apache or Nginx? What version of PHP is the application using? Is the SSL certificate about to expire? Better port scanning, certificate transparency (CT) log coverage, and web crawling capabilities lead to better visibility here. If you don’t know the technology stack, you can’t identify the vulnerabilities associated with it, so you need to fingerprint operating systems, applications, and the services listening on every open port.
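A tiny slice of fingerprinting can be sketched in Python: parsing a product and version out of an HTTP `Server` banner, and computing how many days remain before a certificate’s `notAfter` date. The sketch assumes you already have the banner string and the `notAfter` value in the format Python’s `ssl` module reports (for example, `Jun 30 00:00:00 2025 GMT`). Banners are often hidden or altered, so real fingerprinting engines combine many more signals; the function names here are hypothetical.

```python
import re
import ssl
import time

# Product token, optionally followed by "/version", as in "Apache/2.4.49 (Unix)".
_BANNER = re.compile(r"^\s*([A-Za-z][\w.-]*)(?:/([\w.-]+))?")


def parse_server_banner(banner):
    """Return (product, version) from a Server header; version may be None."""
    match = _BANNER.match(banner or "")
    if not match:
        return None, None
    return match.group(1), match.group(2)


def days_until_expiry(not_after, now=None):
    """Days left before a certificate expires (negative if already expired)."""
    # ssl.cert_time_to_seconds parses strings like "Jun 30 00:00:00 2025 GMT".
    expires_at = ssl.cert_time_to_seconds(not_after)
    now = time.time() if now is None else now
    return (expires_at - now) / 86400


print(parse_server_banner("Apache/2.4.49 (Unix)"))  # ('Apache', '2.4.49')
print(parse_server_banner("nginx"))                 # ('nginx', None)
```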

How to achieve it

- External attack surface management (EASM) and exposure management platforms often combine port scanning, web crawling, and SSL lookups to give you all this information in one place, without having to juggle individual mini-tools.

- Some of these tools have a dedicated technology inventory tab that shows every technology the scanners have detected, including its version and whether that version is known to be vulnerable.

- However, these tools don’t analyze the software libraries and dependencies your applications are built with, so you’ll still need to work with your organization’s developers to obtain software bills of materials (SBOMs) and make sure they are kept up to date.
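If your developers produce SBOMs in the CycloneDX JSON format, a few lines of Python are enough to turn one into a component list you can search. This sketch reads only the top-level `components` array and each entry’s `name` and `version` fields; nested components, dependency graphs, and SPDX documents are out of scope, and the sample SBOM is invented for illustration.

```python
import json


def sbom_components(sbom_json):
    """Return (name, version) pairs from a CycloneDX JSON SBOM string."""
    doc = json.loads(sbom_json)
    if doc.get("bomFormat") != "CycloneDX":
        raise ValueError("expected a CycloneDX JSON document")
    # Only top-level components are read; nested "components" are ignored here.
    return [(c.get("name"), c.get("version")) for c in doc.get("components", [])]


# Hypothetical SBOM for a single Java service.
sample = json.dumps({
    "bomFormat": "CycloneDX",
    "specVersion": "1.5",
    "components": [
        {"type": "library", "group": "org.apache.logging.log4j",
         "name": "log4j-core", "version": "2.14.1"},
        {"type": "library", "name": "jackson-databind", "version": "2.15.2"},
    ],
})
print(sbom_components(sample))
# [('log4j-core', '2.14.1'), ('jackson-databind', '2.15.2')]
```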

3. Visibility into Attack Vectors

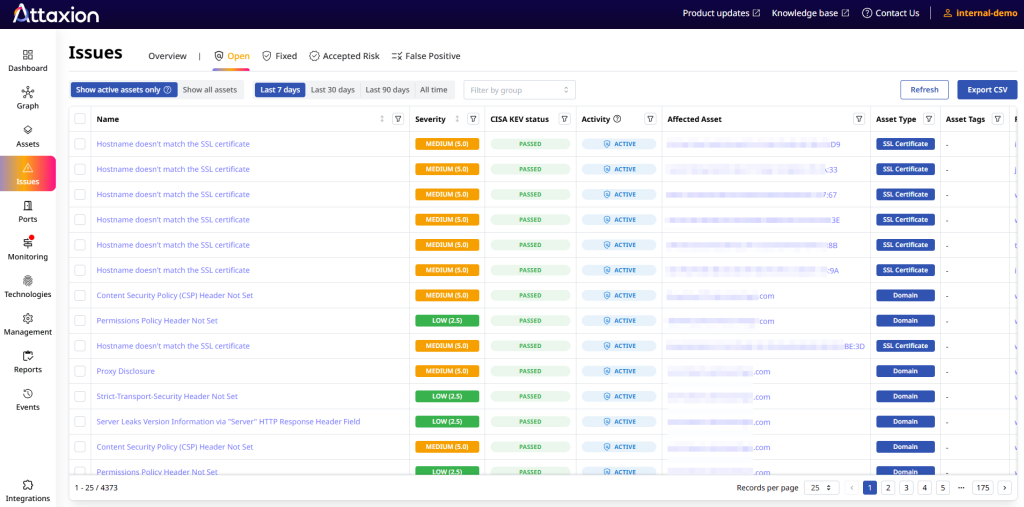

Visibility into attack vectors lets you identify specific holes in your digital environment. This is where you need vulnerability scanners that examine your technology stack and detect security weaknesses and misconfigurations.
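Part of what a scanner does is match fingerprinted versions against known-vulnerable version ranges. The sketch below shows that matching step in isolation. Its single advisory entry reflects CVE-2021-41773, a path traversal flaw introduced in Apache HTTP Server 2.4.49 and addressed in 2.4.50; the table layout and function names are hypothetical. Real scanners also have to deal with vendor backports, pre-release version tags, and configuration-dependent issues that a version check alone cannot see.

```python
# Hypothetical advisory table: product -> list of (cve, introduced, fixed).
# A version is affected when introduced <= version < fixed.
ADVISORIES = {
    "apache": [("CVE-2021-41773", "2.4.49", "2.4.50")],
}


def version_key(version):
    """Turn '2.4.49' into (2, 4, 49); assumes purely numeric dot-separated parts."""
    return tuple(int(part) for part in version.split("."))


def match_vulnerabilities(product, version):
    """Return CVE IDs whose affected range contains this product version."""
    v = version_key(version)
    return [
        cve
        for cve, introduced, fixed in ADVISORIES.get(product.lower(), [])
        if version_key(introduced) <= v < version_key(fixed)
    ]


print(match_vulnerabilities("Apache", "2.4.49"))  # ['CVE-2021-41773']
print(match_vulnerabilities("Apache", "2.4.58"))  # []
```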

How to achieve it

- Better scanners result in better visibility, but in practice there’s a lot more to it. First, there’s the usual trade-off: higher coverage with more false positives, or lower coverage with fewer. All vendors say their scanners have low false positive rates and high coverage, so you’ll probably need to try out different scanners to see which one delivers the best results for your specific environment.

- In theory, false positives can be eliminated through validation: proving that a vulnerability really exists and mapping the attack paths it opens. But validation can involve sending payloads that may take systems down, so weigh that downside when choosing a scanner.

- Some tools (such as Attaxion) combine dynamic application security testing (DAST) and infrastructure scanning, while others only scan web applications or only scan servers. With a combined tool, you can achieve full visibility with just one product; otherwise, you’ll need two or more tools to cover all types of scanning.
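When two or more scanners are needed, their results have to be merged into a single view. The sketch below de-duplicates findings on an (asset, issue) key and records which tools reported each one. The finding dictionaries and their `asset`, `issue`, and `tool` keys are a hypothetical common format that you would first map each scanner’s output into.

```python
from collections import defaultdict


def merge_findings(*reports):
    """Merge findings from several scanners, de-duplicated on (asset, issue)."""
    merged = defaultdict(set)
    for report in reports:
        for finding in report:
            merged[(finding["asset"], finding["issue"])].add(finding["tool"])
    # Sorted for stable output; each entry lists the tools that reported it.
    return [
        {"asset": asset, "issue": issue, "tools": sorted(tools)}
        for (asset, issue), tools in sorted(merged.items())
    ]


dast = [
    {"asset": "app.example.com", "issue": "reflected-xss", "tool": "dast"},
    {"asset": "app.example.com", "issue": "expired-certificate", "tool": "dast"},
]
infra = [
    {"asset": "app.example.com", "issue": "tls-1.0-enabled", "tool": "infra"},
    {"asset": "app.example.com", "issue": "expired-certificate", "tool": "infra"},
]
for row in merge_findings(dast, infra):
    print(row)
```

Findings reported by more than one tool are not automatically more severe, but the agreement can be a useful hint when triaging possible false positives.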

4. Visibility into the Hidden Layers: Traffic, Identity, and Third Parties

You could stop at discovering assets and their vulnerabilities and call it a day, but there are other layers of attack surface visibility that the previous steps don’t cover:

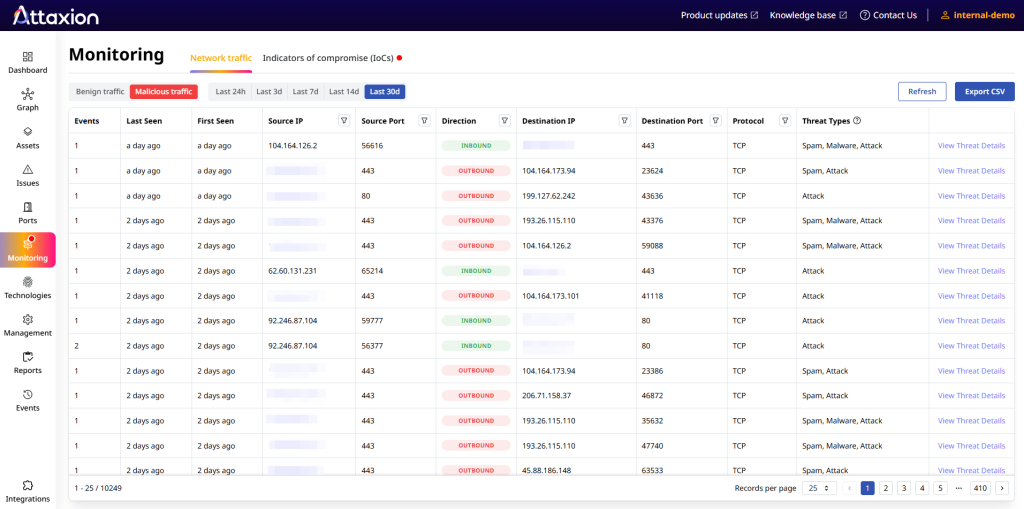

- Network traffic: Visibility into network traffic shows what is actually going on. Are your assets communicating with known malicious IPs? Which IP addresses are your hosts talking to, and on which open ports? Traffic and network topology analysis bring your static asset inventory to life, and this is where network security monitoring tools come in handy.

- Third-party risks: You rely on SaaS providers, marketing agencies, cloud environments, content delivery networks (CDNs), and many other vendors. Since no organization is an island, you need visibility into third-party risks as part of your third-party risk management (TPRM). If a vendor you rely on is breached or has poor security practices, that becomes your security risk. The catch is that you can’t get the same level of visibility into third parties as into your own attack surface, but TPRM tools can at least help you choose vendors that care about how their security posture looks from the outside.

- Identity and access exposure: ISACA experts describe identity as the modern perimeter. You need visibility into account privileges, weak authentication policies, exposed secrets, and leaked credentials. It doesn’t matter how proactive your patch deployment effort is if the admin password is “password123” and it’s circulating on the dark web.
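For the leaked-credentials part, one well-known approach is the k-anonymity range query offered by Have I Been Pwned’s Pwned Passwords service: you send only the first five hex characters of a password’s SHA-1 hash to `https://api.pwnedpasswords.com/range/<prefix>` and check the returned suffixes locally. The sketch below covers the hashing and response-parsing steps and deliberately leaves out the HTTP call, so the response body is passed in as a string. It assumes the range endpoint’s `SUFFIX:COUNT` line format; the function names are hypothetical.

```python
import hashlib


def range_query_parts(password):
    """Split the uppercase SHA-1 hex digest into the 5-char prefix and the rest."""
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    return digest[:5], digest[5:]


def breach_count(password, range_response):
    """Return how often the password appears in a range response (0 if absent).

    range_response is the body returned for the password's prefix, with one
    "SUFFIX:COUNT" entry per line. Only the prefix ever leaves your side.
    """
    _, suffix = range_query_parts(password)
    for line in range_response.splitlines():
        candidate, _, count = line.strip().partition(":")
        if candidate.upper() == suffix:
            return int(count)
    return 0


prefix, _ = range_query_parts("password123")
print(prefix)  # the only part you would send to the range endpoint
```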

Why Is Attack Surface Visibility So Important?

Uncovering Shadow IT

The cliché “You can’t protect what you can’t see” gets thrown around at just about every security conference and board meeting (and even in this article, twice already, we admit). But it’s the truth. Your attack surface is what attackers can see, and there’s a chance they might discover parts of it that you don’t have visibility into.

One of the biggest drivers for better visibility (and ultimately attack surface management) is the need to reduce risk from shadow IT: digital assets you own but aren’t managing. Shadow assets are unpatched, unmonitored, and easy targets. Without a discovery tool that scans the entire internet for your footprint, they can remain invisible to you until they are compromised.
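One simple way to surface shadow IT candidates is to compare what external discovery finds with what your asset register (a CMDB, or even a spreadsheet) says you manage. The sketch assumes both are available as lists of hostnames; the function name and data are hypothetical, and every result still needs a human to confirm ownership before it is treated as shadow IT.

```python
def shadow_it_candidates(discovered, managed):
    """Return externally discovered hosts that are absent from the asset register."""
    # Hostnames are case-insensitive, and a trailing dot denotes the same name.
    def norm(hosts):
        return {h.strip().lower().rstrip(".") for h in hosts}

    return sorted(norm(discovered) - norm(managed))


discovered = ["www.example.com", "Staging-Old.example.com", "vpn.example.com."]
managed = ["www.example.com", "vpn.example.com"]
print(shadow_it_candidates(discovered, managed))  # ['staging-old.example.com']
```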

Faster Incident Response

Attack surface visibility is also key to fast vulnerability detection, remediation, and incident response. When a new critical vulnerability drops (think Log4Shell or similar zero-days), the race is on. Attackers are scanning for it immediately, so you need to be faster. If you have a complete, searchable inventory of your exposure, you can ask, “Where are we running Log4j?” and get an answer in seconds. But if you lack visibility, you are stuck manually checking servers one by one while the clock ticks and your security posture suffers.
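Answering “Where are we running Log4j?” in seconds mostly comes down to keeping the inventory in a queryable shape. The sketch below assumes a hypothetical in-memory inventory that maps each asset to the components detected on it (from fingerprinting or SBOMs) and returns every asset running a given component along with its version. A real inventory would live in a database, but the query is the same idea.

```python
# Hypothetical inventory: asset -> {component name: detected version}.
INVENTORY = {
    "api.example.com": {"log4j-core": "2.14.1", "openjdk": "11.0.12"},
    "shop.example.com": {"nginx": "1.18.0"},
    "reports.example.com": {"log4j-core": "2.17.1"},
}


def where_running(component, inventory=INVENTORY):
    """Return sorted (asset, version) pairs for every asset running `component`."""
    wanted = component.lower()
    return sorted(
        (asset, version)
        for asset, components in inventory.items()
        for name, version in components.items()
        if name.lower() == wanted
    )


print(where_running("log4j-core"))
# [('api.example.com', '2.14.1'), ('reports.example.com', '2.17.1')]
```

From there, each version still has to be checked against the advisory. For Log4Shell (CVE-2021-44228), the first fix shipped in log4j-core 2.15.0, and later releases addressed follow-up issues.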

Compliance

Finally, there is the regulatory angle: visibility is often needed for compliance. Frameworks and regulations such as System and Organization Controls 2 (SOC 2), ISO/IEC 27001, and the General Data Protection Regulation (GDPR) essentially require you to have a handle on your data and your assets. Proving to an auditor that you know exactly what you own and how it is secured is much easier when you have automated visibility tools in place.

How Attaxion Helps You Achieve Maximum Attack Surface Visibility

By now, it should be clear that attack surface visibility can’t be handled manually. The internet is too large, and infrastructure changes too quickly. But automation alone isn’t enough. The real challenge is getting visibility that’s both broad and usable.

Many attack surface management tools and exposure management platforms lean too far in one direction. Some focus on finding everything but generate so much noise that teams struggle to act on the results because of alert fatigue. Others try to reduce false positives but end up missing assets or exposures. In practice, visibility only works when coverage and signal quality are balanced.

Attaxion is designed to help you achieve that balance.

- Special focus on asset discovery: Attaxion combines a wide variety of discovery techniques with high-quality DNS, WHOIS, and SSL data sources, plus some advanced logic on top, to build a solid asset inventory. One reason for switching to Attaxion that we keep hearing from customers is that it discovered assets other tools missed without flooding them with false positives.

- Scanning with rich context: Scanning shouldn’t break your production environment. We combined our own technology fingerprinting engine with a number of tools that scan for different vulnerability types, and added ZAP on top, so that Attaxion offers non-intrusive but effective vulnerability scanning for both infrastructure and web apps. We also went a step further by adding rich context, such as data from multiple vulnerability databases (NVD, EUVD, JVNDB), scores (CVSS and EPSS), real-world exploitation data, and remediation suggestions, to give you as much information as possible for prioritization and remediation.

- Continuous monitoring: A scan you ran last month is already out of date. New ports open, certificates expire, and new vulnerabilities are disclosed. Attaxion performs continuous scanning for continuous visibility, monitoring your environment in real time so you learn about exposure changes as they happen.

- Visibility beyond assets: Attaxion also looks at the broader ecosystem. We provide visibility into network traffic and deeper insights into your digital infrastructure. Specifically, we offer visibility into whether you share infrastructure with something malicious. If your server is hosted on the same IP block as a known malware command-and-control server, your reputation (and email deliverability) could suffer. Attaxion helps you see that risk.
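The shared-infrastructure idea can be illustrated with Python’s standard `ipaddress` module. This is a generic sketch, not Attaxion’s implementation: the /24 block size, the function name, and the sample addresses (from the RFC 5737 documentation ranges) are assumptions. In practice, the list of known-bad addresses would come from a threat-intelligence feed, and what counts as the “same block” depends on how the hosting provider allocates addresses.

```python
import ipaddress


def shared_blocks(my_ips, malicious_ips, prefix=24):
    """Pair each of your IPv4 addresses with known-bad IPs in the same /prefix block."""
    bad = [ipaddress.ip_address(ip) for ip in malicious_ips]
    matches = []
    for ip in my_ips:
        # strict=False builds the enclosing network from a host address.
        block = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
        for other in bad:
            if other in block:
                matches.append((ip, str(other), str(block)))
    return matches


# Addresses from the documentation ranges reserved by RFC 5737.
print(shared_blocks(["203.0.113.10", "198.51.100.7"], ["203.0.113.200"]))
# [('203.0.113.10', '203.0.113.200', '203.0.113.0/24')]
```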

As your organization grows, your attack surface expands, and the opportunities for attackers increase. Security teams cannot rely on static spreadsheets or annual penetration tests to give them the full picture.